3 things a new DBA should learn first

Why would I put a post together about the 3 things a new DBA should learn first? Well, a colleague asked me what I thought she should focus on learning and mastering as a newish SQL DBA. She was looking to become more experienced and confident in her role. Obviously this is an opinion piece, but I felt it was worth sharing. The 3 suggestions are not in any particular order, and I’m sure not everyone will agree, but it’s an opinion based on my own experience as a SQL DBA. (Feel free to disagree in the comments; I’m sure people will feel strongly about some items I’ve omitted.)

- What is happening on the server right now

- Backup and restore of SQL Databases

- Scripting, i.e. T-SQL and PowerShell

What is happening on the SQL Server right now

Help, the SQL Server is on fire and my really important process is not working. Why is the server so slow?

This scenario will happen to you. Invariably, the person (often a manager) will stand over you and expect you to knock out one or two lines of T-SQL wizardry to get things running smoothly again. First of all, I should say that in a perfect world, you would ask them to raise a ticket and you would work on it according to its priority against your other tasks. Then you could let them know what the issue was and what can be done to prevent it happening again. But we rarely work in a perfect world. In this scenario, you need one primary line of T-SQL to get started.

EXEC sp_whoisactive

The procedure sp_whoisactive doesn’t come with SQL Server. It is a community script created by Adam Machanic, and you can download it from GitHub. If you don’t have it installed on your SQL Servers, it is something you should really consider, as it gives much more useful and readable information than sp_who2 and it’s a lot easier than pulling together your own code. It’s mature and very safe, and has been installed on SQL Servers worldwide since 2007.

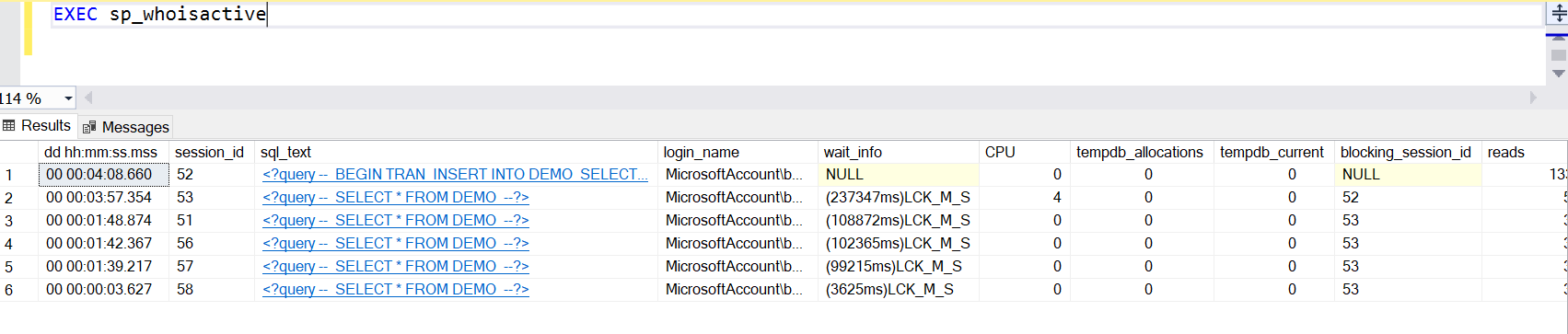

sp_whoisactive offers loads of optional parameters that allow you to customise and sort the output according to your own preference, but just running the proc on its own without parameters will give you a list of everything that is executing at that point in time, ordered by duration descending. If you see things running for minutes that usually take seconds, you may need to check whether they are blocking other transactions.
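As a rough illustration of those optional parameters, the call below asks for a few extra columns and a different sort. The parameter names are the ones I understand the procedure to expose; treat that as an assumption and check them against the version you have installed, since options have been added over the years.

```sql
-- A sketch of a more detailed call; verify each parameter against your installed version.
EXEC sp_whoisactive
    @get_plans = 1,             -- include the query plan for each running statement
    @get_outer_command = 1,     -- show the outer batch or procedure call, not only the current statement
    @get_transaction_info = 1,  -- add transaction log write and transaction start time details
    @sort_order = '[CPU] DESC'; -- sort by CPU instead of the default ordering
```

Collecting plans adds some overhead, so during an incident it can be worth starting with the plain call and adding parameters only when you need the extra detail.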

One parameter I find really useful during an incident is @find_block_leaders. By running EXEC sp_whoisactive @find_block_leaders = 1, you can see exactly how many sessions are being blocked by each blocking session. In the example below, you can see that an INSERT transaction in session_id 52 is blocking 5 other sessions. Each of these is trying to read from the table that has an open insert transaction, so they are blocked. You either need to wait for 52 to finish or you need to kill it in order for the other transactions to move on.
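A small sketch of how this might look in practice: the first statement sorts the output so the worst blocker is at the top, and the second is a plain DMV query you could fall back on if sp_whoisactive is not installed. The sort column name is my understanding of the procedure's output, so check it on your version.

```sql
-- Put the sessions blocking the most others at the top of the output.
EXEC sp_whoisactive
    @find_block_leaders = 1,
    @sort_order = '[blocked_session_count] DESC';

-- Fallback without sp_whoisactive: count the requests directly blocked by each session.
-- This only counts direct blocking; it does not walk the whole blocking chain.
SELECT r.blocking_session_id,
       COUNT(*) AS directly_blocked_requests
FROM sys.dm_exec_requests AS r
WHERE r.blocking_session_id > 0   -- 0 means not blocked; negative values are special cases
GROUP BY r.blocking_session_id
ORDER BY directly_blocked_requests DESC;
```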

A quick note on killing spids. I really only recommend this if you know what the process is and you have an idea of how long it will take to roll back. (Remember, those other 5 spids are still blocked until the rollback completes, and the rollback is a single-threaded process.)
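Before killing anything, it helps to look at who owns the session and what it is connected from. The sketch below uses session_id 52 from the example in the text; the KILL statements are commented out on purpose so nothing is killed by accident.

```sql
-- Who is session 52, and does it have an open transaction?
-- (open_transaction_count is available on SQL Server 2012 and later.)
SELECT s.session_id,
       s.login_name,
       s.host_name,
       s.program_name,
       s.status,
       s.open_transaction_count
FROM sys.dm_exec_sessions AS s
WHERE s.session_id = 52;

-- Only once you know what the session is doing and accept the rollback:
-- KILL 52;

-- After a KILL has been issued, this reports the rollback progress
-- without doing anything else to the session:
-- KILL 52 WITH STATUSONLY;
```

The progress estimate from WITH STATUSONLY is only an estimate, but it gives you something concrete to tell the person standing over your shoulder.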

Of course, it might not be blocking, and in that case you will need more scripts to analyse what is running regularly, how queries are performing and whether any optimisations are needed. That will need to be another blog post, as I want to keep this one fairly succinct.

Backup and restore of SQL Databases

Knowing how to back up a database is an essential skill for a DBA, certainly one of the top required skills. Equally important is knowing how to restore your database backups. This is something you should practice regularly so that, when the time comes that you need to act quickly, you are well rehearsed. Trying to restore a database to a point in time in the middle of a P1 emergency is the stuff of nightmares if you haven’t done it before.
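A point-in-time restore is worth rehearsing on a test instance. The sketch below shows the general shape of one; every database name, logical file name, path and timestamp in it is made up for illustration, so substitute your own (RESTORE FILELISTONLY will show the logical file names inside a backup).

```sql
-- All names, paths and times below are hypothetical.

-- 1. Restore the most recent full backup taken before the target time.
--    NORECOVERY leaves the database ready to accept further restores.
RESTORE DATABASE [Orders_Restored]
FROM DISK = N'X:\Backups\Orders_full.bak'
WITH MOVE N'Orders'     TO N'X:\Data\Orders_Restored.mdf',
     MOVE N'Orders_log' TO N'X:\Logs\Orders_Restored.ldf',
     NORECOVERY;

-- 2. Restore the log backups in sequence.
RESTORE LOG [Orders_Restored]
FROM DISK = N'X:\Backups\Orders_log_1020.trn'
WITH NORECOVERY;

-- 3. In the log backup that covers the target time, stop at that time
--    and bring the database online.
RESTORE LOG [Orders_Restored]
FROM DISK = N'X:\Backups\Orders_log_1025.trn'
WITH STOPAT = N'2024-01-15T10:23:00',
     RECOVERY;
```

Restoring under a different database name, as here, lets you practice without touching the original database.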

Learn the different types of backups available and how often you should be doing each of them. This will vary depending on your business needs. Even on the same instance, some databases may need point-in-time recovery and others won’t. For example, it might be perfectly acceptable to back up your small master database once per day, but you cannot afford to lose more than 5 minutes of orders in the event of your instance going down. In the case of your orders database, you will need a transaction log backup every 5 minutes. Depending on the size of your database, you will be looking at a combination of regular full and differential backups, or just regular full backups (as well as your transaction log backups, of course).
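To make the three backup types concrete, here is a minimal sketch for a hypothetical Orders database. The database name and file paths are assumptions, and in practice each statement would run on its own schedule (for example from a SQL Server Agent job) with unique file names per run.

```sql
-- Log backups need the FULL (or BULK_LOGGED) recovery model, so check that first.
SELECT name, recovery_model_desc
FROM sys.databases
WHERE name = N'Orders';   -- hypothetical database name

-- Full backup: the base for everything else (e.g. weekly or nightly).
BACKUP DATABASE [Orders]
TO DISK = N'X:\Backups\Orders_full.bak'
WITH CHECKSUM;

-- Differential backup: changes since the last full backup (e.g. nightly or every few hours).
BACKUP DATABASE [Orders]
TO DISK = N'X:\Backups\Orders_diff.bak'
WITH DIFFERENTIAL, CHECKSUM;

-- Transaction log backup: run every 5 minutes to meet a 5-minute data loss target.
BACKUP LOG [Orders]
TO DISK = N'X:\Backups\Orders_log.trn'
WITH CHECKSUM;
```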

If you are on a PaaS database such as Azure SQL Database or AWS RDS, the backups can be done automatically without any administration effort, but you will still want to practice restoring to a new instance, possibly into a new region or availability zone, in the event of a disaster.

Other backup related topics to look into are compression, encryption, striping and retention management.
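As a starting point for exploring those topics, the sketch below combines striping, compression and encryption in one backup. The paths and the certificate name BackupCert are hypothetical; encryption needs a certificate (or asymmetric key) that already exists in master and is itself backed up, and feature availability depends on your SQL Server version and edition.

```sql
-- Hypothetical names throughout; assumes the target files do not already exist.
BACKUP DATABASE [Orders]
TO DISK = N'X:\Backups\Orders_stripe1.bak',   -- striping: the backup is split
   DISK = N'Y:\Backups\Orders_stripe2.bak'    -- across both files
WITH COMPRESSION,
     ENCRYPTION (ALGORITHM = AES_256, SERVER CERTIFICATE = [BackupCert]),
     CHECKSUM;

-- Check that the backup set is complete and readable. All stripes must be listed.
-- This is not a substitute for a real test restore.
RESTORE VERIFYONLY
FROM DISK = N'X:\Backups\Orders_stripe1.bak',
     DISK = N'Y:\Backups\Orders_stripe2.bak'
WITH CHECKSUM;
```

Retention management is not shown here because it usually lives outside the BACKUP statement, in whatever job or tool cleans up old backup files.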

Scripting, i.e. T-SQL and PowerShell

This may be a strong opinion, but I believe point and click DBAs are going the way of the dinosaurs. SQL Server Management Studio (SSMS) still makes it possible to do lots of work without knowing much T-SQL or PowerShell, but this does not scale. If you end up managing tens or even hundreds of SQL Servers, scripting will be your friend. The more tedious work you can automate, the more time you have to work on more interesting tasks.

Learn T-SQL

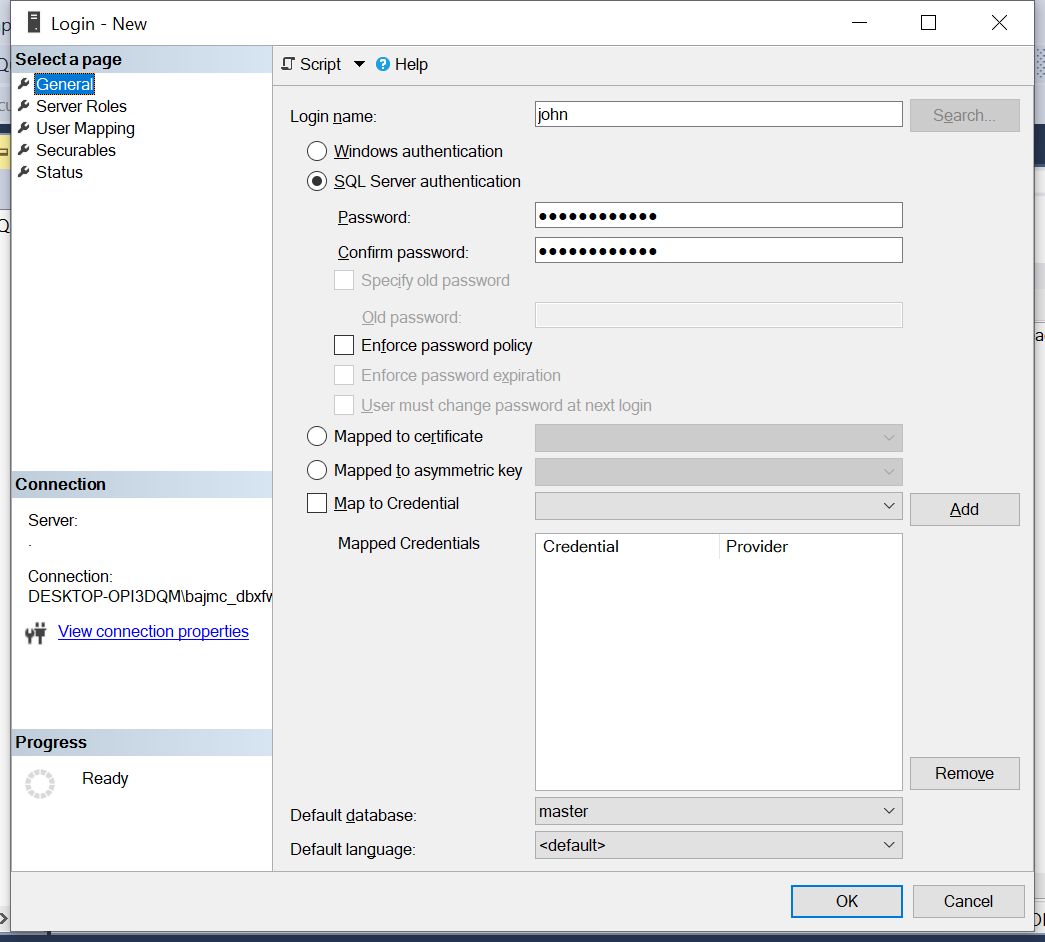

Despite me saying that point and click is not the way to go, SSMS is very useful for providing the T-SQL code for how to do something. Say I want to create a new SQL login that doesn’t expire and give it sysadmin permissions. The first time I do this, I can step through the GUI, but instead of clicking OK, I can click Script and the code is opened in SSMS for me to review and run. I can also save this as a .sql script and either use it as a template for creating future SQL logins, or refer to it often enough that I learn the syntax.

USE [master]
GO
CREATE LOGIN [john] WITH PASSWORD=N'ae34bhijkfgcd5', DEFAULT_DATABASE=[master], CHECK_EXPIRATION=OFF, CHECK_POLICY=OFF
GO
ALTER SERVER ROLE [sysadmin] ADD MEMBER [john]
GO
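Once a script like this has run, a quick query can confirm the login ended up the way you intended. This is a small sketch using the login name john from the script above; it reads the catalog views only and changes nothing.

```sql
-- Confirm the login's settings and its sysadmin membership.
SELECT l.name,
       l.default_database_name,
       l.is_expiration_checked,   -- 0 when CHECK_EXPIRATION = OFF
       l.is_policy_checked,       -- 0 when CHECK_POLICY = OFF
       IS_SRVROLEMEMBER('sysadmin', l.name) AS is_sysadmin   -- 1 when a member
FROM sys.sql_logins AS l
WHERE l.name = N'john';
```

Saving a check like this alongside the creation script is an easy way to start building your own library of reusable T-SQL.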

Learn PowerShell

If this seems daunting, please don’t worry. There is a huge community project called dbatools that has more functionality for managing SQL Server than the official Microsoft SQL Server module. It’s a great way to start running PowerShell commands and building your knowledge as you go.

You can start with commands as and when you need them and build up your knowledge from there. The website is extremely useful, and by tagging @psdbatools on Twitter you will usually get help pretty quickly from someone involved. As your skills and confidence increase, you may even choose to add code to the project by making a pull request. As I write this post, dbatools has over 15,000 commits from 175 contributors. (I’m one of them, in a VERY small way.)

Summary

There are more than 3 things a new DBA should learn, a lot more, but these 3 items will help you remain calm in a crisis and let you automate work, meaning you can be more efficient and have time to spend on interesting work.
