Perfection is not the aim

Your SQL Server indexes can’t always be perfect. By that I mean you can’t index for every query on a busy SQL Server. In this post I’m going to describe just one table from a server I have been tuning recently. It had 26 indexes, which was not unusual for this database. This most likely happened over time, as developers created an index per query that used the table and followed some perceived best practices (with the best of intentions).

Did you say 26 indexes?

Yes, one of the heavily used tables on this database had 26 indexes. It had one clustered index on an ID column, which is fairly standard. There were 25 further non clustered indexes, including an index per foreign key. A shared code base runs across several customers. Some customers use all the functionality and some only use parts of it, but they all get the same database schema. And all of those indexes.

Current usage and how to fix

Remove unused indexes

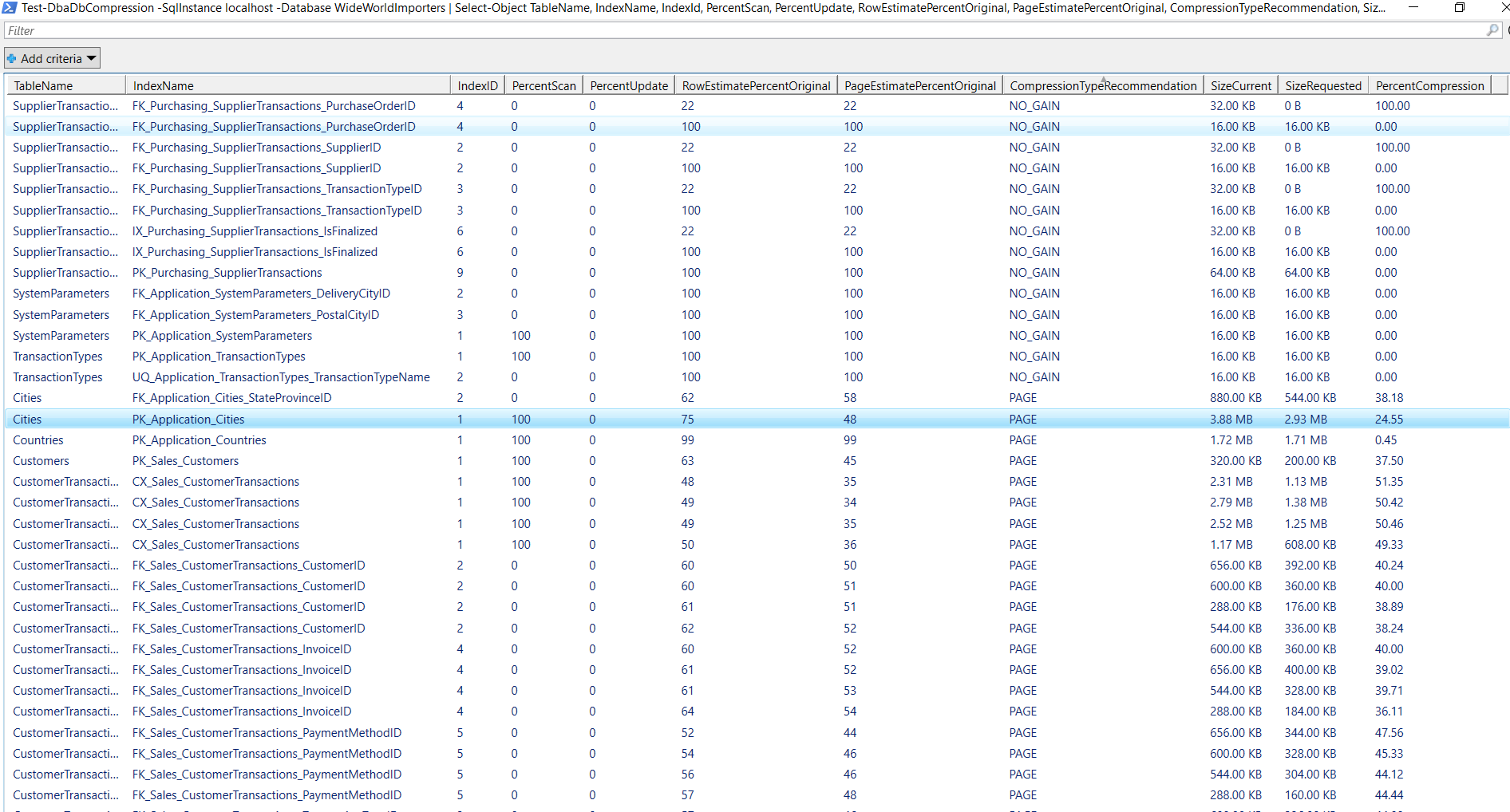

9 of the non clustered indexes on this table were read from less than once per day but were written to up to 18,000 times per day. The servers had been online for 180 days at the time of checking and some of the index uses were in single or double figures. These needed to be dropped as they were not bringing anything to the party.

I got this info by running sp_BlitzIndex and comparing the index usage stats against the server uptime. (I took care to run it on all production servers for all customers, including read-only secondaries.)
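The comparison of usage counts against uptime can be sketched in a few lines of Python. This is only an illustration, not the sp_BlitzIndex output format: the `IndexUsage` class, the index names, the figures, and the one-read-per-day threshold are all assumptions made for the example. The counters are assumed to be the cumulative reads and writes recorded since the server last started, which is why uptime is the denominator.

```python
from dataclasses import dataclass


@dataclass
class IndexUsage:
    name: str
    reads: int   # seeks + scans + lookups since startup (assumed input)
    writes: int  # index updates since startup (assumed input)


def find_drop_candidates(indexes, uptime_days, max_reads_per_day=1.0):
    """Return names of indexes read fewer than max_reads_per_day times on average."""
    if uptime_days <= 0:
        raise ValueError("uptime_days must be positive")
    return [
        ix.name
        for ix in indexes
        if ix.reads / uptime_days < max_reads_per_day
    ]


# Hypothetical figures for a server that has been online for 180 days.
usage = [
    IndexUsage("IX_Orders_CustomerId", reads=32_000_000, writes=3_240_000),
    IndexUsage("IX_Orders_LegacyFlag", reads=42, writes=3_240_000),
    IndexUsage("IX_Orders_ImportBatch", reads=7, writes=1_100_000),
]
candidates = find_drop_candidates(usage, uptime_days=180)
print(candidates)
```

An index should probably only stay on the drop list if it qualifies on every server checked, since a rarely used index on one customer’s server may be busy on another’s or on a read-only secondary.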

Combine similar indexes

This left 16 remaining non clustered indexes to review.

- 9 of which had been used several million times with a maximum of 32 million seeks.

  - 5 out of those 9 appeared to be heavily overlapping other indexes.

    - I needed to see if these can be combined into 1 or 2 indexes.

  - 4 out of those 9 are fairly unique.

    - Those 4 will be retained.

- 7 have been used hundreds of thousands of times and don’t appear to have much overlap.

This leaves us with 4 non clustered indexes being retained, 9 being dropped and 12 to be reviewed in more detail. This is the most difficult stage of the process, as I need to decide which indexes can be merged and which can be dropped. It’s always best to be cautious with this part, because removing the wrong index could slow down an important process.

Of the 12 indexes that needed a more detailed look, I decided to drop 8 and create 3 new ones, a net reduction of 5 indexes for this stage. Multiple groups of indexes had the same keys but different include columns. Some of the include column ranges were very wide and included large data types such as large NVARCHARs. The replacement indexes used the same keys, in the same order, but had a more targeted range of include columns.
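As a rough illustration of how groups with the same keys but different include columns can be spotted, here is a minimal Python sketch. The index names, column names, and the tuple layout are hypothetical; in practice the definitions would come from the index metadata. Key order matters, so indexes with the same columns in a different order are deliberately not grouped together.

```python
from collections import defaultdict


def group_by_keys(indexes):
    """Group index definitions that share the same key columns in the same order."""
    groups = defaultdict(list)
    for name, keys, includes in indexes:
        groups[tuple(keys)].append((name, set(includes)))
    # Only groups with more than one index are consolidation candidates.
    return {k: v for k, v in groups.items() if len(v) > 1}


def include_union(group):
    """Upper bound for a merged index: every include column used in the group."""
    merged = set()
    for _name, includes in group:
        merged |= includes
    return sorted(merged)


# Hypothetical definitions: (index name, key columns, include columns).
definitions = [
    ("IX_A", ["CustomerId", "Status"], ["Total"]),
    ("IX_B", ["CustomerId", "Status"], ["Total", "Notes"]),
    ("IX_C", ["Status", "CustomerId"], ["Total"]),
]
overlapping = group_by_keys(definitions)
for keys, group in overlapping.items():
    print(keys, [name for name, _ in group], include_union(group))
```

The union of include columns is only a starting point. Wide columns such as large NVARCHARs still need a manual decision on whether they earn their place in the replacement index.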

Perfection? No. Better? Yes.

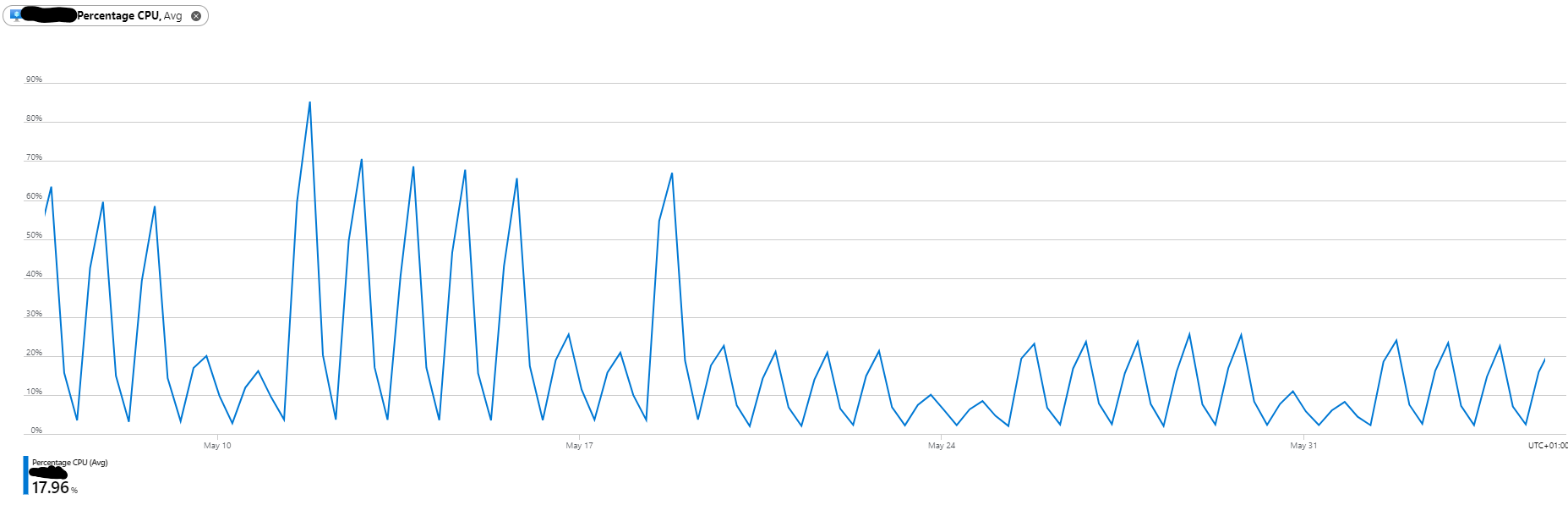

In total, we have a net reduction of 14 non clustered indexes: 9 unused indexes dropped, plus the net reduction of 5 from the detailed review. It still leaves us with 11 non clustered indexes. Your SQL Server indexes can’t always be perfect, but 11 is better than 25. I’d really have loved to get that count down to 5, but it is a lot better than it was. This process should be repeated after a month of the new index structure being in place, with the aim of further consolidation if possible. The new indexes won’t be perfect. Some queries may not be served as well by the new consolidated indexes, or by having a rarely used index removed, but I was trying to improve the overall health of the SQL Server. Reducing the number of writes that were hammering the storage is a step in the right direction.

How did we get so many indexes?

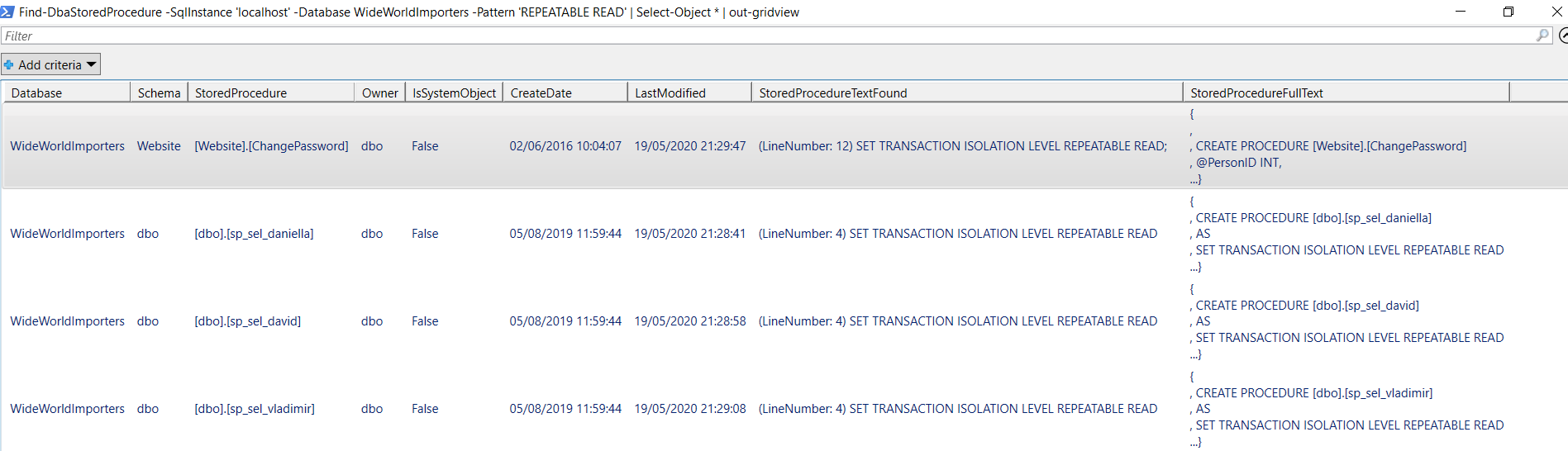

Index creation was all developer led, so when new functionality was rolled out, indexes were created for each new process. This resulted in a lot of overlapping indexes. I don’t blame the developers. There should just have been a process for reviewing index requests and comparing them to what we already had.

How to avoid in future

I see two key approaches to helping avoid a repeat of this death by indexing.

- Don’t hoard the analysis. Sharing the results with the development team will help increase awareness of this death by indexing scenario.

- Implement an approval process for pull requests, with a DBA in the approvers list whenever the change includes a new index.

Verify improvements

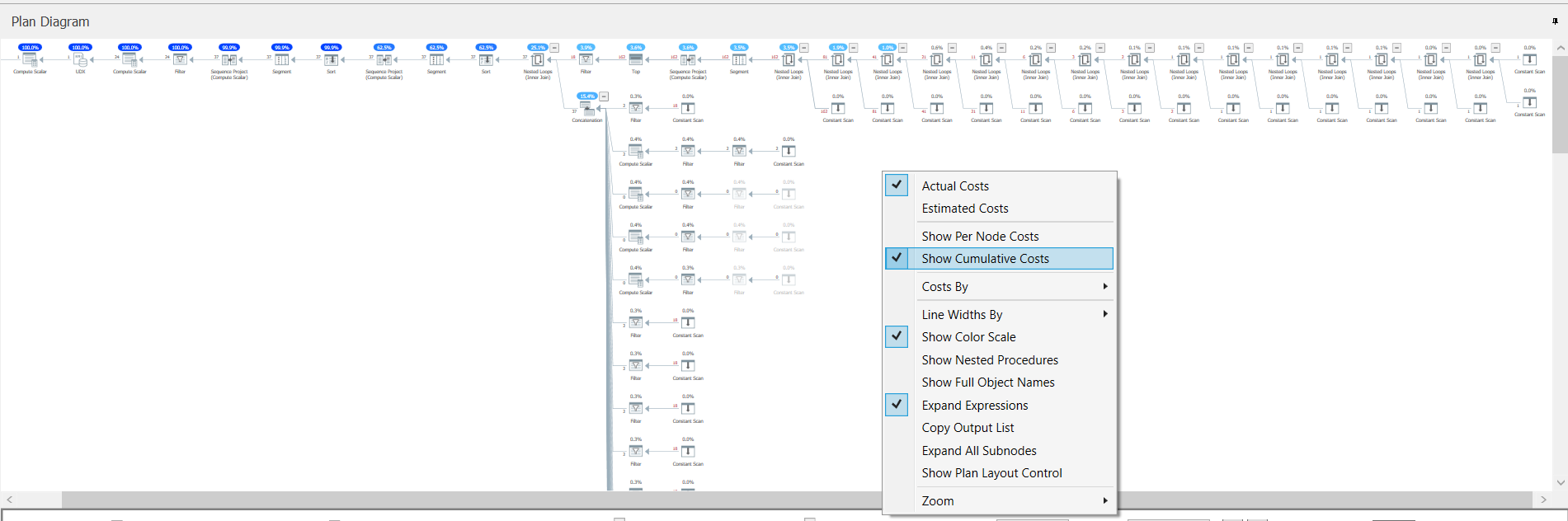

Index changes can affect multiple queries all using the same table, so testing just one query or stored procedure is not enough to release with confidence. Where possible, use a load testing environment which mirrors production, and replay a repeatable workload. By baselining a typical workload, then making the changes and taking a new benchmark, you can measure the improvements overall and for specific database calls.

With this client, there was a load testing environment with synthetic API calls to the application that attempted to mirror production. Not everyone has this luxury when testing, but capturing and replaying a workload and measuring the differences is vital for this type of performance tuning. It can help you catch any serious regressions, as well as giving you the confidence that your performance will improve.
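The baseline-versus-benchmark comparison can be sketched as follows. This is a minimal example, assuming you can export an average duration per query or stored procedure from each run. The procedure names, the timings, and the 10% tolerance are hypothetical, and the function is not tied to any particular replay tool.

```python
def compare_runs(baseline_ms, candidate_ms, tolerance=0.10):
    """Classify each call as improved, regressed, or unchanged.

    Both arguments map a query or procedure name to its average duration in ms.
    Calls missing from either run, or with a non-positive baseline, are skipped.
    """
    report = {}
    for name in sorted(baseline_ms.keys() & candidate_ms.keys()):
        before, after = baseline_ms[name], candidate_ms[name]
        if before <= 0:
            continue
        change = (after - before) / before
        if change > tolerance:
            verdict = "regressed"
        elif change < -tolerance:
            verdict = "improved"
        else:
            verdict = "unchanged"
        report[name] = (round(change * 100, 1), verdict)
    return report


# Hypothetical average durations (ms) before and after the index changes.
baseline = {"usp_GetOrders": 120.0, "usp_SaveOrder": 80.0, "usp_SearchCustomers": 200.0}
after_changes = {"usp_GetOrders": 126.0, "usp_SaveOrder": 48.0, "usp_SearchCustomers": 290.0}

report = compare_runs(baseline, after_changes)
for name, (pct, verdict) in report.items():
    print(f"{name}: {pct:+.1f}% ({verdict})")
```

Looking at every call rather than a single procedure is the point: a write-heavy procedure may speed up once indexes are removed while a search that relied on a dropped index regresses.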

If you don’t already have a suitable load testing environment, the tool I recommend to get you going is an open source project called WorkloadTools by Gianluca Sartori.