To test read intent connections to an AG Listener, I prefer to use SQLCMD but you can also test easily using SSMS.

SQLCMD

The -Kreadonly switch is your key to success here but remember to also specify the database using -d. When not set (and with an initial catalog of master for my login), I found I always got the primary instance back during my check. This simple omission cost me hours of troubleshooting work, because I was convinced my listener wasn’t working correctly. In fact, I just wasn’t testing it correctly.

Read/Write Connections

-sqlcmd -S "SQL01-AG1-list" -d WideWorldImporters -E -q "SELECT @@SERVERNAME;"

Read only Connections

-sqlcmd -S "SQL01-AG1-list" -d WideWorldImporters-E -q "SELECT @@SERVERNAME;" -Kreadonly

The instance that you are connected to will show in the command prompt. Type exit to leave sqlcmd.

SSMS

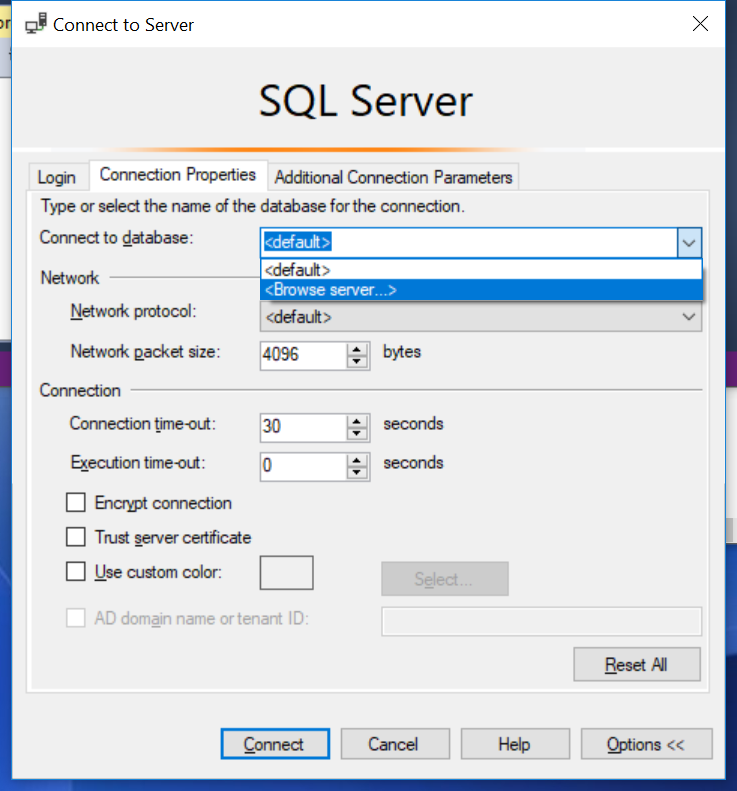

In object explorer, click Connect and choose Database Engine.

Then, in the bottom right hand side of the dialog box, click on Options >>

In Connection Properties, Click Connect to database and then <Browse server ..>. Choose a DB that is in your availability group.

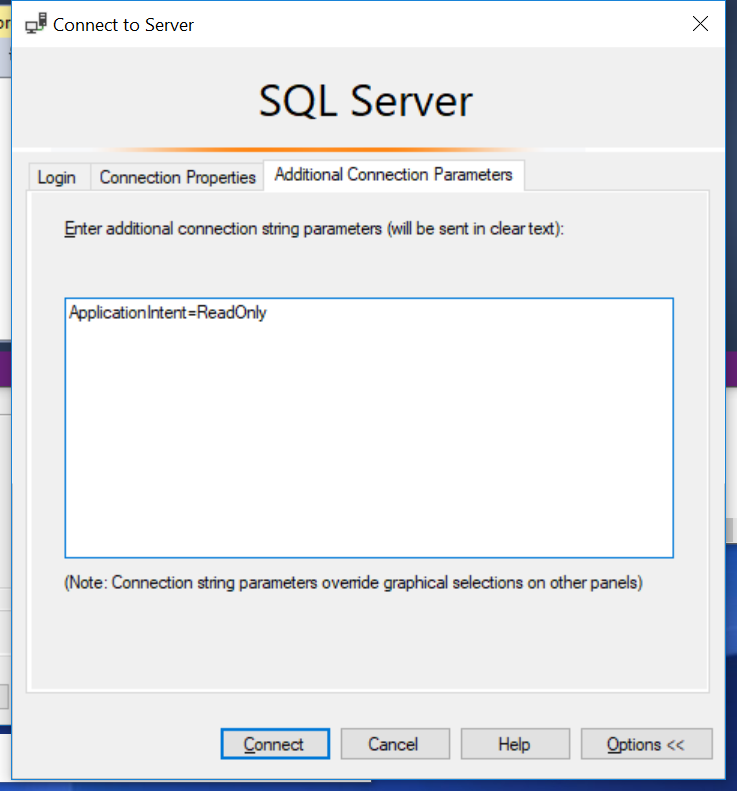

Then click on Additional Connection Parameters and type in ApplicationIntent=ReadOnly

Click connect and run SELECT @@SERVERNAMEand you should expect to see the instance name of your secondary replica, providing you have set up the read only routing correctly.

If you change connection and remove ApplicationIntent=ReadOnly from the Additional Connection Parameters, you should see the result as the name of your primary instance.

Summary

Hopefully these 2 simple techniques to test read intent connections to an AG Listener will be useful and help save you time. It’s a simple blog post but I wanted to write it because I was looking at the problem in too much depth and missing the obvious mistake of choosing my database context.