I spoke at the relaunch of the Glasgow Data User Group (formerly the Glasgow SQL Server User Group).

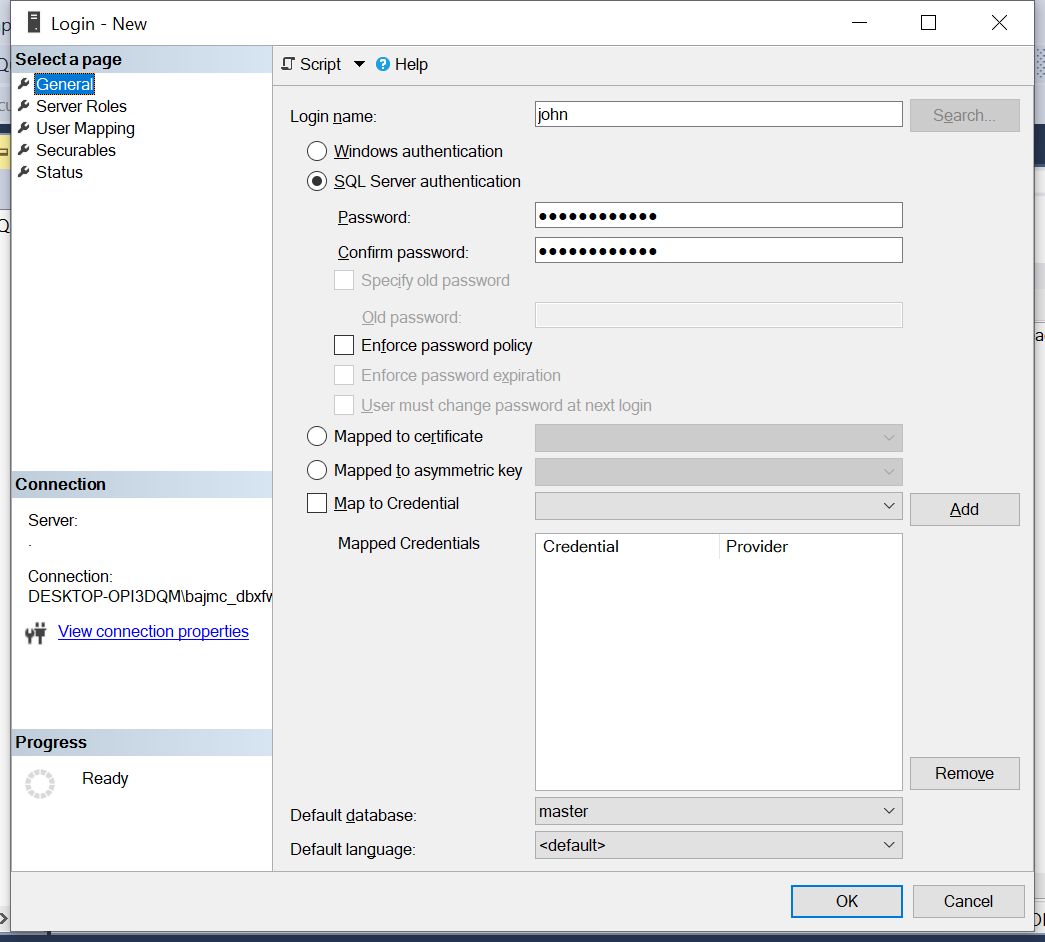

It was a great event, hosted by Craig, Louise and Robert. My presentation covered some useful free community tools that can make life as a DBA much easier. They show that even DBAs with no budget for expensive software still have a wide range of free tools available. Moreover, even if you do have a large budget, some of these free tools are still the best in their class, so they are worth reviewing.

Slides for tonight’s talk on SQL Server Community Tools are available on GitHub.

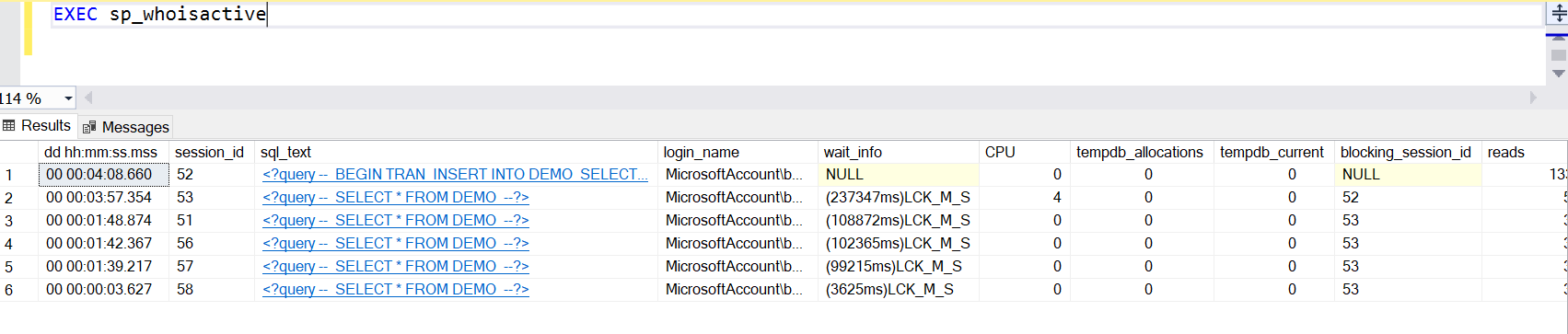

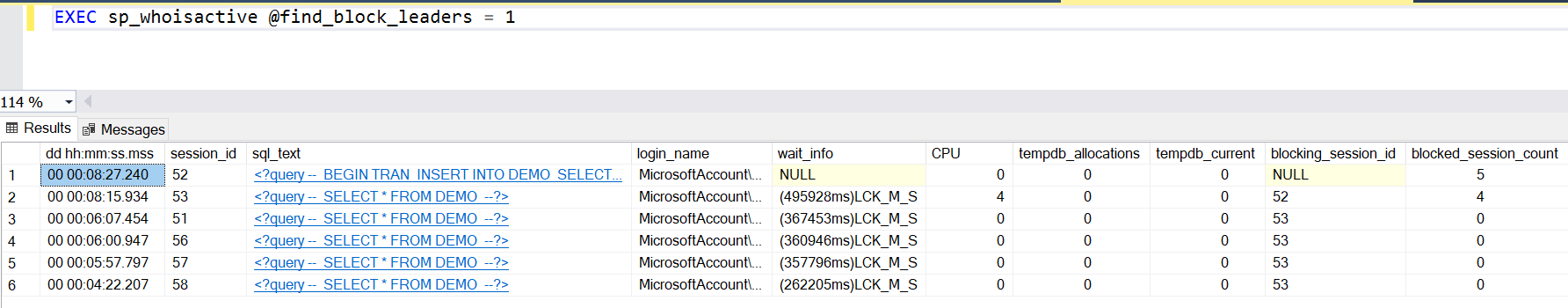

A quick note on killing SPIDs: I only really recommend this if you know what the process is and have an idea of how long it will take to roll back. Remember that those other five SPIDs remain blocked until the rollback completes, and the rollback is a single-threaded process.

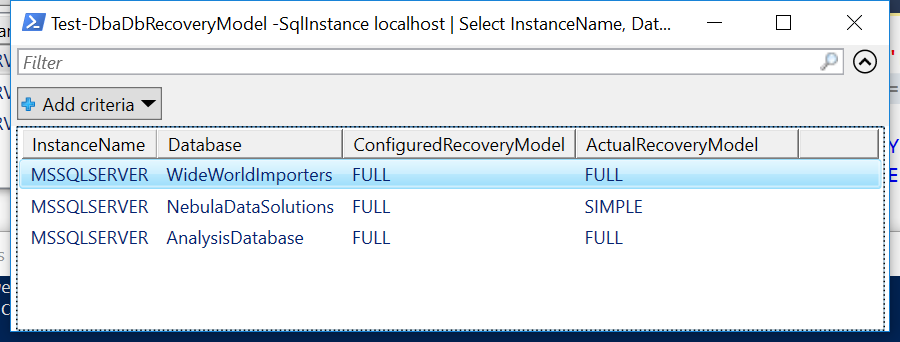

This post is about recovery models for SQL Server databases. Choosing the correct recovery model for a database is crucial to its backup and restore strategy. The recovery model also determines whether you need to maintain the transaction log yourself, with regular log backups, or can leave that task to SQL Server. Let’s look at the various recovery models and how they work.