What happens when you store a space in a char(1) column?

I was asked by a colleague why his where clause wasn’t being selective when filtering on a space value. The column was a char(1) data type. To understand the curious case of the space in char(1), we need to understand how the char data type works and also a bit more about the need for it in this scenario.

Scenario

My colleague had built a user table which included a gender column. A char(1) was chosen because one letter could be used to record the gender of the user. It was also small which helped with performance and overall size of the table. All non specified or non binary genders were assigned a ‘ ‘. (A space) The assumption was that the space character would behave the same as an M or F when used in the SELECT clause.

How the char column works

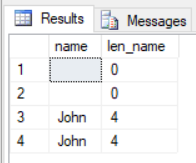

A char is fixed-length data type and any value that fall short of the fixed length is padded out with empty spaces. This means that inserting ” into the gender column has the same affect as entering ‘ ‘. This can be tested using the LEN function:

[sql]

DECLARE @tbl TABLE (name char(10))

INSERT INTO @tbl VALUES (”) — empty char(1)

INSERT INTO @tbl VALUES (‘ ‘) — a space in char(1)

INSERT INTO @tbl VALUES (‘John’)

INSERT INTO @tbl VALUES (‘John ‘)

SELECT name,LEN(name) as len_name FROM @tbl

[/sql]

The spaces do not affect the LEN of the value of the char column

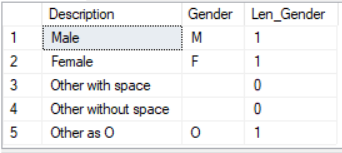

Build a test table – including putting a space in char(1)

[sql]

CREATE TABLE dbo.People (Description NVARCHAR (20),Gender CHAR(1))

INSERT INTO dbo.People(Description,Gender) VALUES(N’Male’, ‘M’)

INSERT INTO dbo.People(Description,Gender) VALUES(N’Female’, ‘F’)

INSERT INTO dbo.People(Description,Gender) VALUES(N’Other with space’, ‘ ‘)

INSERT INTO dbo.People(Description,Gender) VALUES(N’Other without space’, ”)

INSERT INTO dbo.People (Description,Gender) VALUES(N’Other as O’, ‘O’)

[/sql]

Query / Results

[sql]

— Select all rows and show the LEN of the gender column

SELECT

Description,

Gender,

LEN(Gender) AS Len_Gender

FROM dbo.People

[/sql]

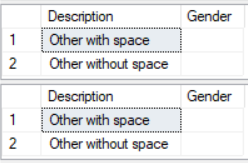

[sql]

— We get the same result with either query so the space is ignored

SELECT * FROM dbo.People WHERE Gender = ‘ ‘

SELECT * FROM dbo.People WHERE Gender = ”

[/sql]

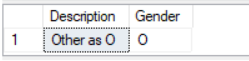

[sql]

— We get the row we want when we use a value such a O instead of a space

SELECT * FROM dbo.People WHERE Gender = ‘O’

[/sql]

Workarounds

The best option is use to a different character like a ‘O’ or something else suitable, anything other than a space in char(1) would work. If you weren’t too concerned about space used in this column, using a longer varchar data type would allow user to enter anything they wanted. Failing that, a TINY int would allow 256 options and still only uses 1 byte.

Its 2019

Yes, I know there a whole discussion about whether we should be storing gender and possible values but this is simply a post about using CHAR(1).

Further reading:

https://stackoverflow.com/questions/4175878/storing-sex-gender-in-database

Slightly tenuous link: https://johnmccormack.it/2016/02/how-does-the-sql-server-engine-process-my-select-query/

Maintenance of SSISDB within SQL Server

Maintenance of SSISDB within SQL Server

My presentation

My presentation