When your SQL Server is in big trouble

It’s so important to stay calm when your SQL Server is in big trouble. I’m talking about when blocking is through the roof, when CPU is pinned and people are yelling from all different directions. Staying calm isn’t just about a state of mind, you need to have a process that you work through, that you have practised and you know inside out.

How bad is it?

In this post, I want to describe what I call a priority 2 problem. It is serious and business impacting but the server is online. It needs immediate attention however or it could escalate to a P1.

P1 – Business critical system(s) are unavailable

P2 – Serious impairment of functionality on a critical system

P3 – Performance is slower than usual but requests are completing

P4 – Performance is generally sub optimal and should be improved

Get a colleague on comms

One thing that is guaranteed to happen is that people who are not directly involved in fixing the issue, will want updates. They have good reason to want updates and are well meaning, but the constant need to reply to emails or Teams messages will seriously impact the speed at which you are working.

Back in the good old pre-covid days of office working, this could easily be achieved by someone sitting near you and the two of you communicating verbally. With remote working being more and more common now, I recommend you have some kind of audio channel open that you can speak when you need to and silence is ok too. This could be a phone call on speaker, or a teams call. The technology isn’t the main thing here, the idea is that you can express updates vocally to someone capable of communicating with the wider organisation.

Where possible, your colleague should be technically capable of answering related questions. They should open a chat in Teams or Slack or whatever software your organisation prefers and provide regular updates there. They should answer questions, keep to the update schedule and most importantly, divert people away from you.

A pre practiced routine

Now the scenarios can be different, in this one I want to talk about how I would handle a SQL Server which appears to be functioning (barely) but is extremely slow. In other words, it is a priority 2 incident as defined above. Helpdesk supervisors are calling tech support to advise and customers are calling them as the website is unusable. On top of that, the MI team and data scientists are contacting the DBAs directly because their queries won’t run.

Have a script or checklist

In my script, I tend to use well established community stored procedures. The sort of ones that most DBAs know about and many use. If you start trying to write new queries in the middle of a slow down, that is going to cost you time. Stick with what works, what is established and what you have used before and know well.

I’ve mentioned these in previous posts but the main things I am going to run are:

- sp_whoisactive – https://github.com/amachanic/sp_whoisactive

- sp_blitzfirst – https://www.brentozar.com/first-aid/

- sp_blitzlock – https://www.brentozar.com/first-aid/

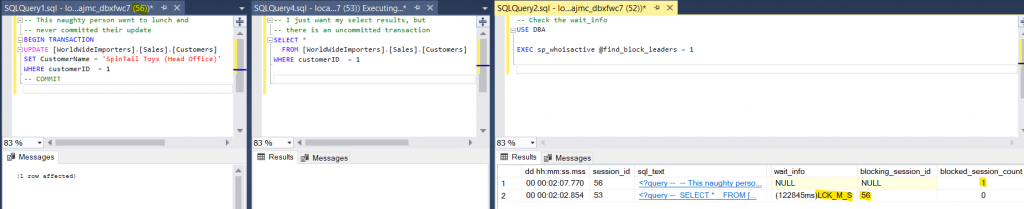

sp_whoisactive

I always run this first because it it designed specifically for showing you current database activity, and it has saved my bacon so many times in the past. Simply running this parameter free will show you what is running on your SQL Server right now. It is ordered by query duration descending so the long runners are at the top. It can give you blocking information, information on wait types and on open transactions as well. For advanced features, you need to use some parameters.

sp_blitzfirst

If you can find what you need with sp_whoisactive, you may not even need to use this stored procedure, Where this procedure comes into its own is it tells you when certain things have recently changed or when they out of the ordinary.

e.g. sp_blitzfirst will tell you if:

- The plan cache has been recently erased

- You have high CPU utilization from a program other than SQL Server

- How long each wait type has been waiting during the previous 5 seconds

If something stands out that you don’t understand, there will be data in the URL column that you can copy/paste into a web browser. The web page will give you an explanation of the problem.

sp_blitzlock

This proc is all about deadlocks. You might not need to run it if the first two have given you enough information to fix the issue in hand. However, if deadlocks are at the root of your problems, this will tell you which queries are involved and when. It’s also easier to read than deadlock graphs.

There are more things that I’ll run in the fullness of time but we’ll leave them for later. I only want to focus on these 3 things for now to zero in on the immediate problem.

The script

USE dba

/*

To run this, you also need to install:

- sp_whoisactive

- sp_blitzfirst

- sp_blitzlock

*/

-- Raises an error if you run the whole script in error

RAISERROR ('Dont run it all at once',20,-1) WITH LOG

-- sp_whoisactive with various parameters

EXEC sp_whoisactive @find_block_leaders = 1 --,@get_locks = 1

EXEC sp_whoisactive @sort_order = 'sql_text' -- Tells at a glance if you have a lot of the same query running. For the F5 report runner troublemakers

-- What has been hurting us in the last 5 seconds. Look for wait stats, and anything out of the ordinary, such as the plan cache has been recently erased.

EXEC dbo.sp_BlitzFirst @expertmode = 1

-- Are we experiencing deadlocks

EXEC sp_BlitzLock

-- Deadlocks in last hour

DECLARE @StartDateBlitz datetime = (SELECT DATEADD(HH,-1,GETDATE())), @EndDateBlitz DATETIME = (SELECT GETDATE())

EXEC sp_BlitzLock @EndDate = @EndDateBlitz, @StartDate = @StartDateBlitz

GO

/* Some other things to consider

Have the usual optimisation jobs run as expected. Stats/indexes etc

If one proc has regressed badly, could it help to clear only that plan from the cache or to recompile it.

EXEC sp_blitz -- Although not as handy as the others for real time issues. Better used as a health check

EXEC sp_blitzcache -- More useful for helping you identify the resource hungry queries, allowing you

EXEC sp_readerrorlog 0,1, 'memory'

*/

The calm after the storm

I will dedicate a full post to this however for now, it is important to say that slow queries or resource intensive queries should be identified and added to a backlog for fixing. If one particular query is likely to go bad again, it should be treated as a priority to fix, in order that we don’t see the same issue escalating again.

You should also do a SQL Server Health Check in order to satisfy that you don’t have an sub optimal configuration causing you issues.