On Sunday morning I sat and passed exam DP-300, which earns the Microsoft Certified: Azure Database Administrator Associate certification. I’ve been sitting Microsoft exams for a number of years now, but this was my first in a while. I wanted to jot down the thoughts still in my head following the exam.

Preparation

I prepared for the exam by following the course from ACloudGuru. I’m a big fan of this site as it has helped me learn so much about AWS, so I thought I would trust it for Azure learning as well. I have to admit I had been going through the course at a snail’s pace, and by the time I completed it I had forgotten some of the things I had learned initially. I sat their exam simulator and got 77%, which was not a pass.

I realised from doing the exam simulator that there were some areas where my knowledge was weaker, specifically on some newer features I hadn’t worked with day to day and also migration options for the likes of Postgres and MariaDB.

I wrote down each wrong answer, learned why it was wrong and what was correct. I resat the test and got 87%. I repeated the process and got 89%. A lot of the questions were repeating by now and I felt ready to sit the exam.

Sitting the DP-300 exam

The exam itself was MUCH harder than the exam simulator. In fairness, though, the simulator pushes you to achieve 80%, which leaves a bit of a buffer come exam day (Microsoft only look for 700/1000). There are some areas I think the simulator could have covered more extensively, and a bigger bank of questions would have been helpful.

However, when it came to the exam, I managed to rely on my experience to pull some old knowledge out of the darkest recesses of my brain, and made a few educated guesses. Thankfully I passed with 800+. Not the best score, but the exam itself is pass or fail.

Focus areas for sitting DP-300

I can’t be too specific here due to the NDA, but if you are an experienced SQL DBA with limited or no recent Azure working experience, I would suggest focusing on these areas:

- PaaS vs IaaS

  - Remember PaaS needs less administrative effort than IaaS or on premises. Look for clues in the question.

- Business Critical and Premium vs General Purpose and Standard

- Azure SQL Server IaaS Agent Extension

- Database Mail for Azure SQL Managed Instance

- Migration options (Online and offline) – And not just for SQL Server

- Encryption vs Dynamic data masking

- Query store

- Key Vault

- Some Azure networking like Virtual network and VPN

- Know your performance DMVs and how to diagnose blocking and slow executing queries

- Backup of system DBs
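To make the blocking point a bit more concrete, here is a minimal sketch of the reasoning rather than anything from the exam. It assumes you have already pulled `session_id` and `blocking_session_id` from `sys.dm_exec_requests` into a list of rows; the sample data and the function name `find_head_blockers` are made up for illustration. The idea is to follow each blocked session up its chain until you reach the session at the head, which is usually the one to investigate first.

```python
from collections import defaultdict

# Hypothetical sample rows, shaped like two columns of sys.dm_exec_requests.
# blocking_session_id is 0 (or NULL/None) when the request is not blocked.
SAMPLE_REQUESTS = [
    {"session_id": 51, "blocking_session_id": 0},
    {"session_id": 52, "blocking_session_id": 51},
    {"session_id": 53, "blocking_session_id": 52},
    {"session_id": 54, "blocking_session_id": 0},
    {"session_id": 55, "blocking_session_id": 51},
]


def find_head_blockers(requests):
    """Map each head blocker to the sorted list of sessions stuck behind it."""
    # Only keep requests that are actually blocked by another session.
    blocked_by = {
        row["session_id"]: row["blocking_session_id"]
        for row in requests
        if row["blocking_session_id"]
    }

    victims = defaultdict(set)
    for session in blocked_by:
        head = session
        seen = {session}
        # Walk up the chain; the 'seen' set stops us looping on a cycle.
        while head in blocked_by and blocked_by[head] not in seen:
            head = blocked_by[head]
            seen.add(head)
        victims[head].add(session)

    return {head: sorted(sessions) for head, sessions in victims.items()}


if __name__ == "__main__":
    print(find_head_blockers(SAMPLE_REQUESTS))
```

With the sample rows above, session 51 comes out as the only head blocker, with 52, 53 and 55 queued behind it. In practice the head blocker may be an idle session with an open transaction that does not appear in `sys.dm_exec_requests` at all, which is why the sketch does not require the head to be one of the input rows.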

What’s next

I’m still deciding, but this has given me the bug again after a couple of years of not doing much active learning. I think it will be either AZ-104, to help me re-establish some Azure infrastructure knowledge, or DP-203, to introduce me to some parts of data engineering that I’m looking to learn.

This DP-900 Exam prep reading list comes from Microsoft Learn. Links will be added as I go through the course material / suggested reading on the Microsoft Learn site until I have completed the full course. I am currently working my way through the material.

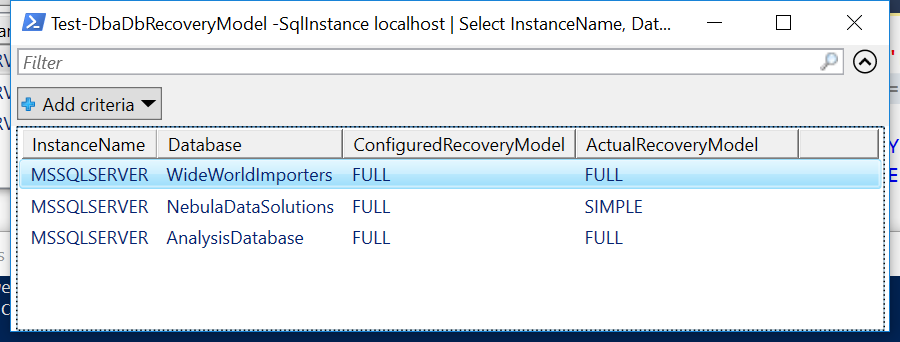

This post is about database recovery models for SQL Server databases. Having the correct recovery model for a database is crucial in terms of your backup and restore strategy for the database. It also defines if you need to do maintenance of the transaction log or if you can leave this task to SQL Server. Let’s look at the various recovery models and how they work.
