Securing your SQL Server RDS instance

Learn how to use security groups to restrict access and create logins, users and roles above and beyond the standard admin user.

For this month’s T-SQL Tuesday, Andy Leonard (b|t) asks “How Do You Respond When Technology Changes Under You?”

It’s a great topic, and it inspired me to write a T-SQL Tuesday post this month. I’ve missed the last couple, partly because I just didn’t feel like it and partly because the previous topics weren’t well suited to me. Hopefully this will get me writing again.

When I got my start with SQL Server, I was just that: a SQL DBA. I was a junior member of a big team that looked after a large SQL Server estate, and I just wanted to learn to become as experienced as the senior folk. I was learning, being mentored and enjoying making progress. Then I heard we were going to start using “The Cloud”. Not only that, but I was going to be responsible for the operation of our Kafka clusters, EMR and Elasticsearch.

A lot of these technologies were developer-led. The developers knew why they wanted to use them, and from an operations perspective, my job was to make sure things didn’t fail and that errors were handled. I had no background in them and didn’t really understand them. Simply learning to use PuTTY to log into a Linux VM was one of my early successes.

The move to the cloud, in this case AWS, was going ahead with or without me, so I had to embrace it. Of course, I learned and collaborated with colleagues and got to an acceptable standard, but it was only when I decided to do AWS certifications that things really took off. Studying for the AWS Certified Solutions Architect exam gave me an appreciation for all cloud services, not just the data ones like RDS. The AWS certifications improved my networking skills, taught me about object vs block storage and, of course, covered all of the different data products (often managed services, but not always).

I approached the certification process from a genuine perspective of learning the technology well, rather than just passing the exam. Simply passing the exam does not do much for you. Even if it gets you a job, you will likely be found out pretty quickly.

A further benefit of learning one cloud technology well is that when we started using Azure, I found the concepts were the same. I understood the networking, the security groups and PaaS vs IaaS. That made the transition much easier, and when I moved to a job that was predominantly based in Azure, I didn’t have the huge knowledge gap I would have had without my AWS certifications.

So for me, a structured learning approach is best. It’s not for everyone but I am certainly glad that my job is no longer just “SQL DBA”.

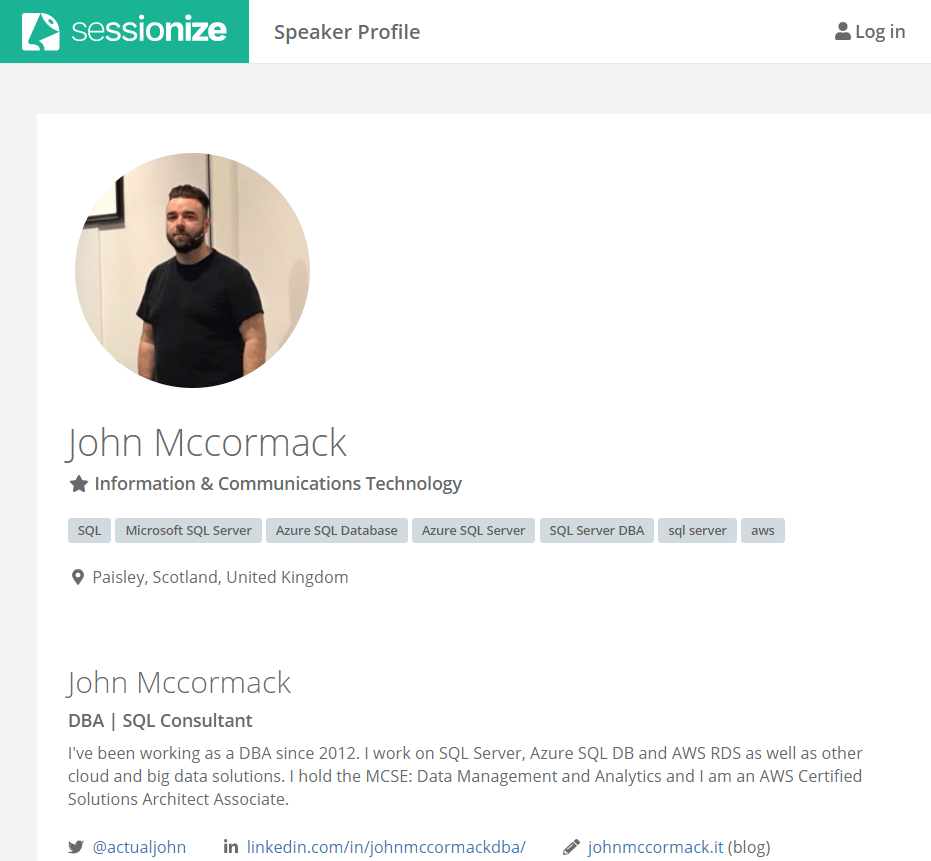

My final tip in my series “7 ways for data teams to save money in Azure” is to delete unused instances (and orphaned storage).

It’s easy to spin up instances in Azure, especially for developers if you haven’t implemented a tight permissions policy. Pet projects and proofs of concept (POCs) get created regularly, and the person involved needs to remember to delete all of the resources, or else you will be charged indefinitely.

Even services that can be stopped and deallocated, such as Virtual Machines, will cost you money if they are not deleted. Why? A deallocated VM stops accruing compute charges, but you still pay for its associated resources, including its storage, which can be significant. Moreover, you need to delete that storage explicitly when you delete those VMs, or you’ll keep paying for it too. This is known as orphaned or unattached storage, and it is not cheap.

Microsoft has published a PowerShell script to find unattached storage in Azure, which you can run to list every occurrence across your subscriptions.
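For anyone who prefers Python to PowerShell, here is a rough equivalent of the idea. It is not Microsoft’s script. It is a minimal sketch that assumes the Azure SDK for Python (`azure-identity` and `azure-mgmt-compute`), where `ComputeManagementClient.disks.list()` yields every managed disk in a subscription and a disk’s `managed_by` property is empty when no VM has it attached. The function name is my own. It only lists disks and deletes nothing. Unmanaged disks (VHD blobs in storage accounts) would need a separate check.

```python
from typing import Iterable, List


def find_unattached_disks(disks: Iterable) -> List[str]:
    """Return the resource IDs of managed disks that no VM has attached.

    Assumes each item exposes `id` and `managed_by`, as the Disk model in
    azure-mgmt-compute does; `managed_by` is None for an unattached disk.
    """
    return [disk.id for disk in disks if not disk.managed_by]


# Example against a real subscription (assumes azure-identity and
# azure-mgmt-compute are installed and you are signed in):
#
#   from azure.identity import DefaultAzureCredential
#   from azure.mgmt.compute import ComputeManagementClient
#
#   client = ComputeManagementClient(DefaultAzureCredential(), "<subscription-id>")
#   for disk_id in find_unattached_disks(client.disks.list()):
#       print(disk_id)
```

Taking a plain iterable rather than the client keeps the filtering logic easy to try offline before pointing it at a real subscription.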

I would encourage developers and engineers to use a resource group for POCs. This way, when it is done, the whole resource group can be obliterated in one go, leaving nothing behind.
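Clearing up a finished POC then comes down to deleting its resource group. The sketch below assumes `azure-mgmt-resource`, whose `ResourceManagementClient.resource_groups` operations include `list()` and `begin_delete()`. It also assumes a hypothetical convention of tagging POC resource groups with `purpose=poc`. It defaults to a dry run that only reports what it would delete, because deleting a resource group removes everything inside it.

```python
from typing import Iterable, List


def poc_group_names(groups: Iterable, tag_name: str = "purpose", tag_value: str = "poc") -> List[str]:
    """Names of resource groups carrying the (hypothetical) POC tag."""
    return [g.name for g in groups if (g.tags or {}).get(tag_name) == tag_value]


def delete_poc_groups(resource_client, dry_run: bool = True) -> List[str]:
    """Delete every tagged POC resource group, or only list them when dry_run is True.

    `resource_client` is assumed to be an azure.mgmt.resource.ResourceManagementClient.
    """
    names = poc_group_names(resource_client.resource_groups.list())
    if not dry_run:
        for name in names:
            # begin_delete starts a long-running operation; result() blocks until it completes.
            resource_client.resource_groups.begin_delete(name).result()
    return names
```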

Once you have got rid of all your old unused instances and orphaned storage, you may want to prevent team members from doing the same thing again. This is where a combination of Azure policies and tagging comes in handy. You can set policies to prevent new services being spun up without the appropriate tags. This Microsoft tutorial explains how to manage tag governance with Azure Policy.
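As a rough illustration, a custom policy that denies new resources lacking a given tag might look like the definition built below. It is modelled on Azure’s built-in “Require a tag on resources” policy. The Python wrapper, the `require_tag_policy` name and the `owner` example are my own, so check the output against the tutorial before assigning it. The tag name itself is supplied through the `tagName` parameter when the policy is assigned.

```python
import json


def require_tag_policy(effect: str = "deny") -> dict:
    """Policy definition body that blocks (or audits) resources missing a given tag."""
    if effect not in ("deny", "audit"):
        raise ValueError("effect should be 'deny' or 'audit'")
    return {
        # Indexed mode evaluates only resource types that support tags and location.
        "mode": "Indexed",
        "parameters": {
            "tagName": {
                "type": "String",
                "metadata": {
                    "displayName": "Tag Name",
                    "description": "Name of the tag, such as 'owner'",
                },
            }
        },
        "policyRule": {
            # Matches any resource where the named tag does not exist.
            "if": {"field": "[concat('tags[', parameters('tagName'), ']')]", "exists": "false"},
            "then": {"effect": effect},
        },
    }


if __name__ == "__main__":
    print(json.dumps(require_tag_policy(), indent=2))
```

Starting with `audit` rather than `deny` is one way to see how many existing resources would fall foul of the rule before you start blocking deployments.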

Your SQL Server indexes can’t always be perfect. By that I mean you can’t index for every query on a busy SQL Server. In this post, I’m going to describe just one table from a server I have been tuning recently. It had 26 indexes, which was not unusual for this database. This most likely happened over time as developers created an index for each query that used the table, while also following some perceived best practices (with the best of intentions).

Yes, one of the heavily used tables in this database had 26 indexes. It had one clustered index on an ID column, which is fairly standard, and 25 further nonclustered indexes, including one per foreign key. A shared code base runs across several customers. Some customers use all of the functionality and some only use parts of it, but they all get the same database schema. And all of those indexes.

Nine of the nonclustered indexes on this table were read less than once per day but were written to up to 18,000 times per day. The servers had been online for 180 days at the time of checking, and some of these indexes had total read counts in single or double figures. They needed to be dropped, as they were not bringing anything to the party.

I got this information by running sp_BlitzIndex and comparing the index usage statistics against the server uptime. (I took care to run it on all production servers for all customers, including read-only secondaries.)
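The rule of thumb above (read less than once a day, written far more often than read) is easy to apply once the numbers are in front of you. This sketch is not how sp_BlitzIndex works internally. It assumes you have already exported the `user_seeks`, `user_scans`, `user_lookups` and `user_updates` counters from `sys.dm_db_index_usage_stats` for each index, summed across every server and readable secondary with broadly comparable uptime. Those counters reset when the instance restarts, which is why they are divided by uptime. The threshold of one read per day is the one used in this post, not a universal rule, and the names are illustrative.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class IndexUsage:
    """Counters as exported from sys.dm_db_index_usage_stats, summed across servers."""
    name: str
    user_seeks: int
    user_scans: int
    user_lookups: int
    user_updates: int

    @property
    def reads(self) -> int:
        return self.user_seeks + self.user_scans + self.user_lookups


def drop_candidates(stats: List[IndexUsage], uptime_days: float, max_reads_per_day: float = 1.0) -> List[str]:
    """Indexes read less than max_reads_per_day on average but written more often than read."""
    if uptime_days <= 0:
        raise ValueError("uptime_days must be positive")
    return [
        s.name
        for s in stats
        if s.reads / uptime_days < max_reads_per_day and s.user_updates > s.reads
    ]
```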

That left 16 nonclustered indexes to review.

This leaves us with 4 nonclustered indexes being retained, 9 being dropped and 12 to be reviewed in more detail. This is the most difficult stage of the process, as I need to decide which indexes can be merged and which can be dropped. It’s always best to be cautious with this part, because removing the wrong index could slow down an important process.

Of the 12 indexes that needed a more detailed look, I decided to drop 8 and create 3 new ones, a net reduction of 5 indexes for this stage. Multiple groups of indexes had the same keys but different include columns. Some of the include column lists were very wide and contained large data types such as large NVARCHARs. The replacement indexes used the same keys, in the same order, but with a more targeted set of include columns.
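To find groups like these, one option is to bucket indexes by their exact key columns. The sketch below assumes a hypothetical dictionary that maps each index name to its (key columns, include columns), copied for example from sp_BlitzIndex output or `sys.index_columns`. `same_key_groups` lists candidates for merging. `include_union` shows how wide a merged index would be if it kept every include column, which is exactly what you may not want when large NVARCHAR columns are involved.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

IndexDef = Tuple[Sequence[str], Sequence[str]]  # (key columns in order, include columns)


def same_key_groups(indexes: Dict[str, IndexDef]) -> List[List[str]]:
    """Group index names whose key columns are identical and in the same order."""
    by_keys: Dict[Tuple[str, ...], List[str]] = defaultdict(list)
    for name, (keys, _includes) in indexes.items():
        by_keys[tuple(keys)].append(name)
    return [sorted(names) for names in by_keys.values() if len(names) > 1]


def include_union(indexes: Dict[str, IndexDef], names: List[str]) -> List[str]:
    """Every include column used by the named indexes (the widest possible merge), minus key columns."""
    keys = set(indexes[names[0]][0])
    cols = {col for name in names for col in indexes[name][1]}
    return sorted(cols - keys)
```

The union is a ceiling, not a recommendation: the targeted replacements described above keep only the include columns the important queries actually need.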

In total, that is a net reduction of 14 nonclustered indexes, which still leaves us with 11. Your SQL Server indexes can’t always be perfect, but 11 is better than 25. I’d really have loved to get that count down to 5, but it is a lot better than it was. This process should be repeated once the new index structure has been in place for a month, with the aim of further consolidation if possible. The new indexes won’t be perfect: some queries may not be served as well by the new consolidated indexes, or by having a rarely used index removed, but I was trying to improve the overall health of the SQL Server. Reducing the number of writes that were hammering the storage is a step in the right direction.
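For anyone checking the arithmetic, the nonclustered index count across both stages works out as follows, using only the figures in this post:

```latex
\underbrace{25}_{\text{before}}
  - \underbrace{9}_{\text{rarely read}}
  - \underbrace{8}_{\text{dropped in review}}
  + \underbrace{3}_{\text{replacements}}
  = 11,
\qquad
9 + (8 - 3) = 14 \ \text{fewer nonclustered indexes}
```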

Index creation was entirely developer-led, so when new functionality was rolled out, indexes were created for each new process. This resulted in a lot of overlapping indexes. I don’t blame the developers; there should have been a process for reviewing index requests and comparing them with the indexes we already had.
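A review process does not need to be elaborate to catch the worst overlaps. As a hypothetical starting point, a proposed index’s key columns can be compared with the existing indexes on the same table. If either key list is a leading prefix of the other, the existing index may already serve the query, or could be widened instead of adding another. This sketch ignores include columns, filters and uniqueness, so treat a match as a prompt for a conversation rather than an automatic rejection.

```python
from typing import Dict, List, Sequence


def is_leading_prefix(shorter: Sequence[str], longer: Sequence[str]) -> bool:
    """True if `shorter` matches the first len(shorter) key columns of `longer`, in order."""
    return 0 < len(shorter) <= len(longer) and list(longer[: len(shorter)]) == list(shorter)


def overlapping_indexes(proposed_keys: Sequence[str], existing: Dict[str, Sequence[str]]) -> List[str]:
    """Existing indexes whose key columns overlap the proposal as a leading prefix, either way round."""
    return [
        name
        for name, keys in existing.items()
        if is_leading_prefix(proposed_keys, keys) or is_leading_prefix(keys, proposed_keys)
    ]
```

Key order matters here because SQL Server can only seek on a leading subset of an index’s keys, which is why `OrderDate` alone does not count as overlapping an index keyed on `CustomerId, OrderDate`.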

I see two key approaches to helping avoid a repeat of this death by indexing.

Index changes can affect multiple queries that use the same table, so testing just one query or stored procedure is not enough to release with confidence. Where possible, use a load testing environment which mirrors production, and replay a repeatable workload. By baselining a typical workload, then making the changes and taking a new benchmark, you can measure the improvements overall and for specific database calls.
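Once a baseline and a post-change run have been captured, the comparison itself can be simple. This sketch assumes you have already exported per-call durations (in milliseconds, say) from both replays into dictionaries keyed by procedure or query name. The 10% regression threshold is an arbitrary example, and the function names are illustrative.

```python
from statistics import mean
from typing import Dict, List

Durations = Dict[str, List[float]]  # call name -> durations from one replay


def per_call_change(baseline: Durations, candidate: Durations) -> Dict[str, float]:
    """Percentage change in mean duration per call; negative means faster."""
    changes = {}
    for call, before in baseline.items():
        after = candidate.get(call)
        if not before or not after:
            continue  # missing from one run: worth investigating separately
        changes[call] = (mean(after) - mean(before)) / mean(before) * 100.0
    return changes


def overall_change(baseline: Durations, candidate: Durations) -> float:
    """Percentage change in total duration across calls present in both runs."""
    shared = [c for c in baseline if baseline[c] and candidate.get(c)]
    before = sum(sum(baseline[c]) for c in shared)
    after = sum(sum(candidate[c]) for c in shared)
    return (after - before) / before * 100.0


def regressions(changes: Dict[str, float], threshold_pct: float = 10.0) -> List[str]:
    """Calls that got slower by more than threshold_pct."""
    return sorted(call for call, pct in changes.items() if pct > threshold_pct)
```

Reporting both the overall figure and the per-call list matters for index consolidation in particular: the total can improve while one important call quietly gets slower.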

With this client, there was a load testing environment with synthetic API calls to the application that attempted to mirror production. Not everyone has this luxury when testing but capturing and replaying a workload, and measuring the differences is vital for this type of performance tuning. It can help you catch any serious regressions as well as giving you the confidence that your performance will improve.

If you don’t already have a suitable load testing environment, the tool I recommend to get you going is an open source project called WorkloadTools by Gianluca Sartori.