AZCopy for SQL Backups and other stuff

AZCopy is a useful command line utility for automating the copying of files and folders to Azure Storage Account containers. Specifically, I use AZCopy for SQL Backups but you can use AZCopy for copying most types of files to and from Azure.

In this blog post example (which mirrors a real world requirement I had), the situation is that whilst I need to write my SQL backups over a network share, I also want to push them up to Azure Storage (in a different region) to allow developers quicker downloads/restores. This is why I need to use AZCopy. If I only needed my backups to be written to Azure, I could have used BACKUP TO URL instead.

Authentication

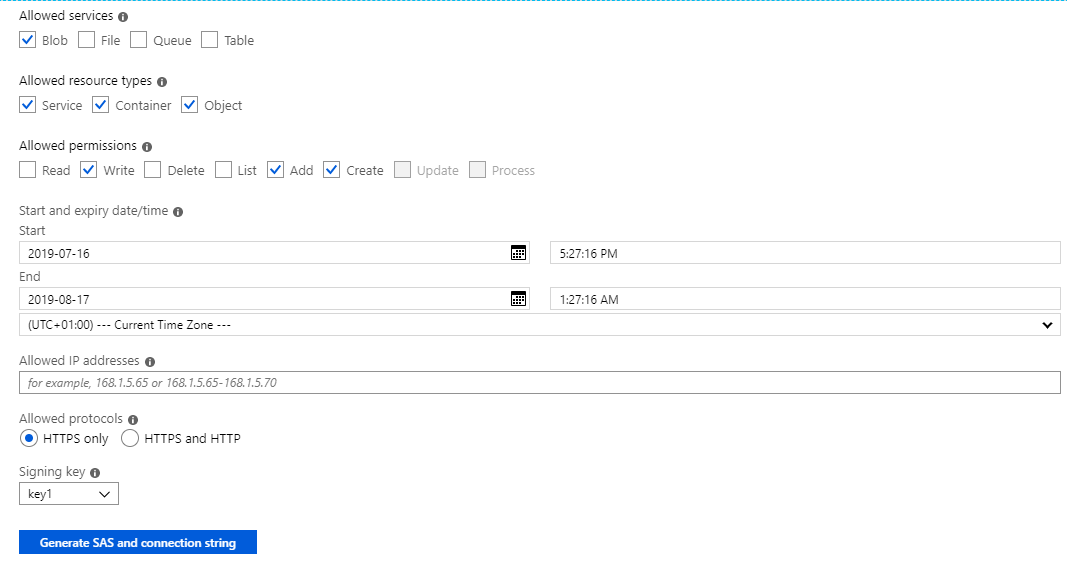

I use Shared Access Signatures (SAS token) to authorise access to the storage account container. You can create an SAS token for any Access key by clicking Generate SAS and connection string button from storage accounts/Shared Access Signature.

For this scenario, my SAS token is limited to write / add / create operations and cannot be used to read or delete data. This is just to limit the scope for security reasons, but you can decide whatever meets your needs.

SAS tokens have an expiry date. I’ve set mine to expire on 16th August 2019 so I will need to create a new token at this time and update my AZCopy command if I want to continue with the automated copying via a scheduled script.

Command

Storage Account: johnbox Container: instance1

The basic copy command is simple. An example is:

[shell]azcopy cp “<source location>” “<destination location>”[/shell]

For using an SAS token, we append this to the destination url:

[shell]azcopy cp “C:\temp\johns_backup.bak” “https://johnbox.blob.core.windows.net/instance1?sas-token”[/shell]

However, the azcopy sync option is more efficient because it won’t force a copy over the network if the source and destination already match. The example below also syncs the full folder, not just a specific file.

[shell]azcopy sync “C:\temp” “https://johnbox.blob.core.windows.net/instance1?sas-token” [/shell]

Schedule

I chose to schedule this in a sql agent job. The job step is directly after the step where I back up to my network share. You could also use windows task scheduler if you prefer.

If you haven’t used AZCopy yet?

Download AZCopy from https://docs.microsoft.com/en-us/azure/storage/common/storage-use-azcopy-v10. The page also gives instructions on how to run and authenticate AZCopy commands.

If you have time, please have a look at my other Azure posts.

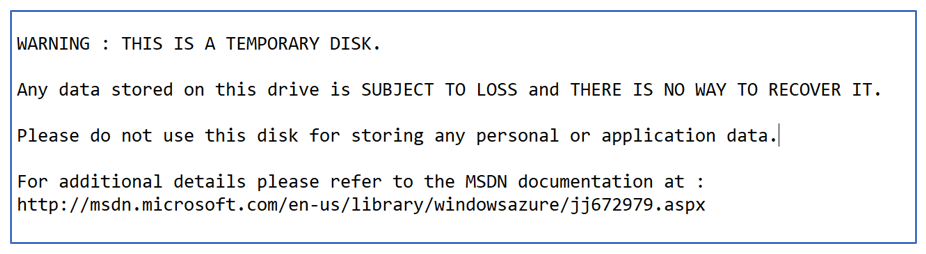

Tempdb files on D drive you say?

Tempdb files on D drive you say?