This post is part of the October 2020 edition of #tsql2sday. Thanks to Rob Volk for hosting (blog|twitter) and for coming up with a great topic. “Data Analogies, or: Explain Databases Like I’m Five!” I genuinely can’t wait to read some of the responses. I think it’s going to be a very educational series of posts. I’ve chosen to explain how an availability group listener works by using an analogy.

What is an availability group listener – An Analogy

Let’s say you run a business and have multiple phone numbers. You may have an office number, a mobile number and a fancy 0800 number to give your business the impression of being much bigger, like your national competitors. You put the 0800 number on your van, website, all of your advertising and your business cards. You’re not actually a national scale business though and you don’t have a receptionist to handle the calls into your 0800 number. So what happens to those calls?

They are routed through to your preferred number, usually your office number, but you can change it to your mobile number when you are out of office. You could even set a preference for it to try to route the call to your office first, then try your mobile phone if the office is unavailable. Customers that have your mobile number or office number can still call you directly on those but they will not be rerouted if either of those phones are unavailable. If you change your mobile number, you can just update the routing to use your new number and the customer is unaware of the change.

What is an availability group listener – A slightly (but not much) deeper dive

What’s in a name

Like the 0800 number in the analogy above, the AG listener is a single address that routes SQL Server connections to a primary or secondary replica within an availability group. It is made up of a DNS name that is unique within the domain, one or more IP addresses, and a listener port.

Connections to SQL Server where availability groups are in use should use the listener name instead of the server name. This means that if a replica in the AG becomes unavailable, connections are routed to a replica that is available, and the client does not need to change its connection details. A listener also lets you use your SQL Server resources more evenly, because there is an option to direct read-intent connections to a readable secondary replica. You can also offload backups to secondary replicas. All of this spreads the load across the available replicas. If you route everything through the primary, it can be overworked whilst your secondary replicas do next to nothing. Secondary replicas that serve reads or backups still need to be fully licensed, so it is a shame, and a waste of money, for them to sit idle.
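To make that concrete, here is a minimal sketch of a helper that builds an ODBC connection string pointing at the listener rather than at an individual server. The listener name `ag-listener.example.local`, the database `SalesDb` and the driver version are made-up values for illustration. `ApplicationIntent=ReadOnly` is the keyword that marks a connection as read-intent, and it only has an effect if read-only routing has been configured on the availability group.

```python
def build_connection_string(listener, database, read_only=False):
    """Build an ODBC connection string that targets the AG listener.

    The listener and database names are supplied by the caller; the
    driver name below is an assumption and may differ on your machine.
    """
    parts = [
        "Driver={ODBC Driver 17 for SQL Server}",
        f"Server=tcp:{listener}",        # listener DNS name, not a server name
        f"Database={database}",
        "Trusted_Connection=yes",
    ]
    if read_only:
        # Marks the connection as read-intent so it can be routed
        # to a readable secondary when routing is configured.
        parts.append("ApplicationIntent=ReadOnly")
    return ";".join(parts) + ";"


# Hypothetical names, for illustration only.
read_write = build_connection_string("ag-listener.example.local", "SalesDb")
reporting = build_connection_string("ag-listener.example.local", "SalesDb", read_only=True)
print(read_write)
print(reporting)
```

The only difference between the two strings is the application intent, so a reporting workload can be moved off the primary without the application knowing which replica it ends up on.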

Port

If you’re looking for simplicity, designate 1433 as the listener port. It is the default SQL Server port, so clients do not need to specify a port in the connection string. If you need to, you can designate a different port, but you must then include it in your connection string.
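As a small sketch of the difference, the helper below formats the `Server` value for a connection string. The listener name and the alternative port 14330 are hypothetical; the point is only that the port is appended after a comma when it is not the default.

```python
DEFAULT_SQL_PORT = 1433


def listener_address(listener, port=DEFAULT_SQL_PORT):
    """Return the Server value for a connection string.

    The port is left out when the listener uses the default 1433,
    and appended as ",port" otherwise.
    """
    if port == DEFAULT_SQL_PORT:
        return f"tcp:{listener}"
    return f"tcp:{listener},{port}"


# Hypothetical listener name and non-default port.
default_address = listener_address("ag-listener")
custom_address = listener_address("ag-listener", 14330)
print(default_address)
print(custom_address)
```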

Seamless

If your primary replica becomes unavailable and you have automatic failover enabled, your old secondary replica can switch seamlessly to become the new primary. New connections made through the listener will not notice a thing.
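Connections that were already open when the failover happened are a different story: they are dropped and the client has to reconnect. A common way to handle this is a simple retry loop around the connection attempt. The sketch below is one possible approach, not a recommendation from any particular driver. The `connect` argument is a hypothetical callable that opens a connection through the listener, and `ConnectionError` stands in for whatever exception your real driver raises.

```python
import time


def connect_with_retry(connect, attempts=5, delay_seconds=2.0, sleep=time.sleep):
    """Call connect() until it succeeds or the attempts run out.

    connect is any zero-argument callable that opens a connection via
    the listener. ConnectionError is a stand-in; a real driver raises
    its own exception types.
    """
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return connect()
        except ConnectionError as error:
            last_error = error
            if attempt < attempts:
                # Give the failover a moment to complete before retrying.
                sleep(delay_seconds)
    raise last_error
```

Because the client always dials the listener name, the retry does not need to know which replica has become the new primary.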

Spread the load

If you have more than one secondary, you can spread the read-intent load across a set of readable secondaries. This means that all replicas take a share of the workload. Prior to SQL Server 2016, one preferred replica would receive all of the read-intent traffic.
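The idea of sharing read-intent connections across a set of secondaries can be pictured as a simple rotation. The sketch below is a toy model of that behaviour, not how SQL Server implements it internally, and the replica names `SQLNODE2` and `SQLNODE3` are made up.

```python
from itertools import cycle


def make_router(readable_secondaries):
    """Return a function that hands out replicas in rotation."""
    rotation = cycle(readable_secondaries)
    return lambda: next(rotation)


# Hypothetical replica names, for illustration only.
route = make_router(["SQLNODE2", "SQLNODE3"])
targets = [route() for _ in range(4)]
print(targets)  # ['SQLNODE2', 'SQLNODE3', 'SQLNODE2', 'SQLNODE3']
```

Each new read-intent connection goes to the next replica in the list, so no single secondary takes all of the reporting traffic.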

Thanks again to Rob for coming up with an innovative topic.

John

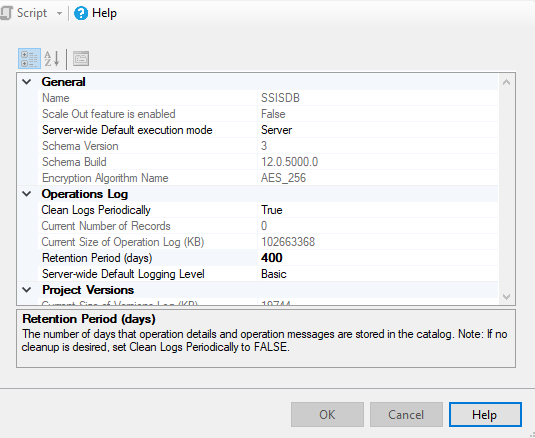

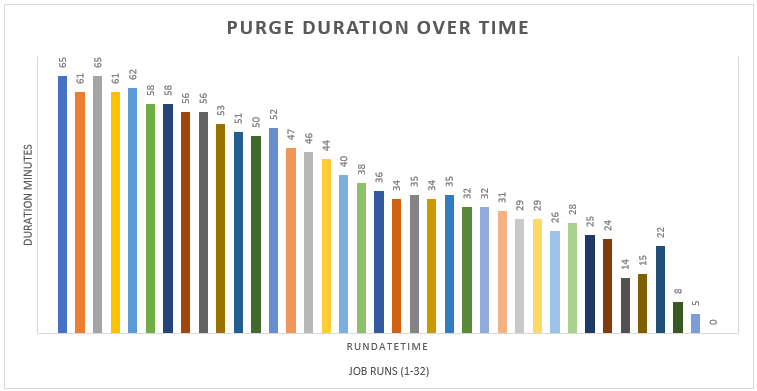

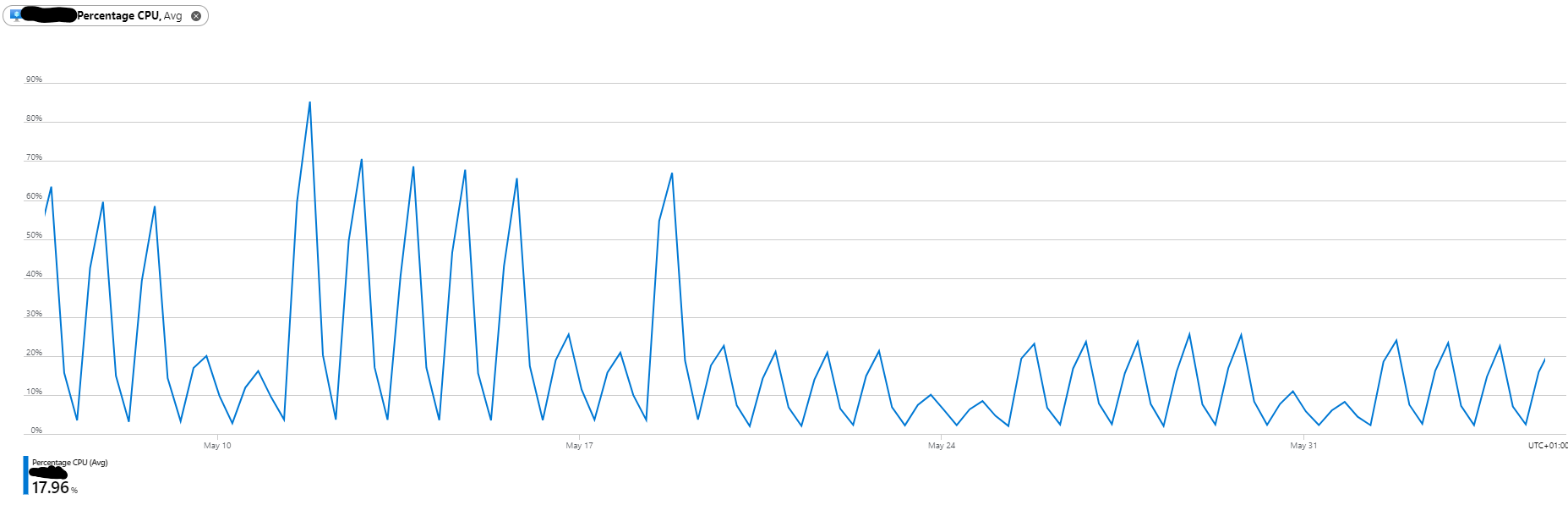
