Change a user’s resource class within Azure Synapse Analytics | A solution

This post shows a method for allowing developers to change a user’s resource class within Azure Synapse Analytics | Azure SQL Data Warehouse without granting them membership of the db_owner fixed database role.

Scenario:

I was a approached by a data warehouse developer who wanted to be able to take advantage of the feature in Azure Synapse Analytics (Formerly Azure SQL Data Warehouse) called ‘Resource classes’. Resource classes allow users in certain roles to have access to extra compute resources for their queries. This can increase their max memory per query (although there is an overall trade-off on concurrency). Unfortunately, due to this being managed via roles, the person looking to change resource classes needs to be in the db_owner role so they can manage database role membership. As I didn’t want to add the developer to the db_owner role, a different solution was required.

Solution:

A separate sql user can be added to the db_owner role on the SQL Data Warehouse in question. We can then create a credential in azure automation based upon this user. Finally, we create a Runbook which executes db_addrolemember (but only allows a specific few roles related to resource groups). This means we can give an end user the ability to add/remove people by granting them access to run the runbook.

How to:

Create SQL login/user

-- Create sql login CREATE LOGIN [dev_dba_automation] WITH PASSWORD = 'S3cur£P@ssword!' -- Change into DWH context, not master CREATE USER [dev_dba_automation] WITH DEFAULT_SCHEMA=[dbo] -- FYI -- ALTER ROLE X ADD MEMBER Y doesn't currently work with Azure SQL DWH

Create Azure Automation Credential

From your automation account, find the Credentials option (under shared resources) on the left hand menu.

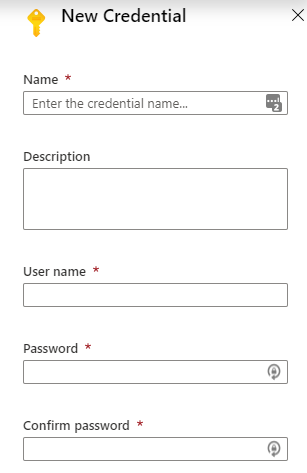

Then create your new credential, using the newly created sql login name as the user name and then add the correct password.

Please note if the end user has privileged access to Azure Automation with a role such as owner or contributor, they could use the credential to carry out other tasks in the data warehouse so please be careful about who will have access to creating new runbooks.

Create Runbook

- Log into Azure Automation and create a new runbook.

- Copy this Powershell code in.

<#

.DESCRIPTION

This runbook allows the person running it to add a database user to a

database role on dev-datawarehouse

.PARAMETER Database

String name of the SQL Server database to connect to

.PARAMETER databaserole

String name of the database role you wish to add a member to

.PARAMETER rolemember

String name of the user you wish to add to the database role

.PARAMETER add_or_drop

String Must = add for db_addrolemember or drop for sp_droprolemember

.NOTES

AUTHOR: John McCormack

LASTEDIT: Dec 16, 2019

#>

param(

[parameter(Mandatory=$True]

[string] $Database,

[parameter(Mandatory=$True]

[string] $databaserole,

[parameter(Mandatory=$True]

[string] $rolemember,

[parameter(Mandatory=$True]

[string] $add_or_drop

)

# Variables

$SqlCredentialAsset = 'dev_az_automation'

$SqlServer = 'dev-datawarehouse.database.windows.net'

$SqlServerPort = 1433

$SqlCredential = Get-AutomationPSCredential -Name $SqlCredentialAsset

if ($SqlCredential -eq $null)

{

throw "Could not retrieve '$SqlCredentialAsset' credential asset. Check that you created this first in the Automation service."

}

# Get the username and password from the SQL Credential

$SqlUsername = $SqlCredential.UserName

$SqlPass = $SqlCredential.GetNetworkCredential().Password

# Define the connection to the SQL Database

$Conn = New-Object System.Data.SqlClient.SqlConnection("Server=tcp:$SqlServer,$SqlServerPort;Database=$Database;User ID=$SqlUsername;Password=$SqlPass;Trusted_Connection=False;Encrypt=True;Connection Timeout=30;")

# Open the SQL connection

$Conn.Open()

# Run sp_addrolemember as the credential user

#$Cmd=new-object system.Data.SqlClient.SqlCommand("EXEC sp_addrolemember @rolename = $databaserole, @membername = $rolemember", $Conn)

if($add_or_drop -eq "add")

{

$Cmd=new-object system.Data.SqlClient.SqlCommand("EXEC sp_addrolemember @rolename = $databaserole, @membername = $rolemember", $Conn)

}

else

{

$Cmd=new-object system.Data.SqlClient.SqlCommand("EXEC sp_droprolemember @rolename = $databaserole, @membername = $rolemember", $Conn)

}

$Cmd.CommandTimeout=120

if ($databaserole -Like "*rc*")

{

# Execute the SQL command

$Ds=New-Object system.Data.DataSet

$Da=New-Object system.Data.SqlClient.SqlDataAdapter($Cmd)

[void]$Da.fill($Ds)

# We should look at adding some logging for this

WRITE-OUTPUT $rolemember $add_or_drop"ed to database role:$databaserole."

}

else

{

WRITE-OUTPUT "Invalid role"

}

# Close the SQL connection

$Conn.Close()

- Run the workbook and observe the output.

Further reading

- https://docs.microsoft.com/en-us/azure/sql-data-warehouse/resource-classes-for-workload-management#example-code-for-finding-the-best-resource-class

- https://docs.microsoft.com/en-us/sql/t-sql/statements/alter-role-transact-sql?view=sql-server-ver15#examples

- https://gallery.technet.microsoft.com/scriptcenter/How-to-use-a-SQL-Command-be77f9d2

- (Base script I adapted)

I left what I considered to be a great permanent job to become a DBA contractor in Glasgow. It was one where I could pick some of my own projects, work with new and interesting technology and work in a team which collaborated well, liked each other and were good at sharing knowledge.

I left what I considered to be a great permanent job to become a DBA contractor in Glasgow. It was one where I could pick some of my own projects, work with new and interesting technology and work in a team which collaborated well, liked each other and were good at sharing knowledge.